
I am an integrated MS/PhD student at KAIST, advised by Kimin Lee. I received a B.S. degree with a double major in mathematics and computer science/engineering from POSTECH, and I was also an exchange student at Stanford. I am currently working as a research engineer (contractor via YunoJuno) at Google DeepMind. My main research interest is building capable and reliable AI agents, and I am currently focusing on digital tasks (e.g., web tasks).

CV / Google Scholar / GitHub


(*: equal contribution)


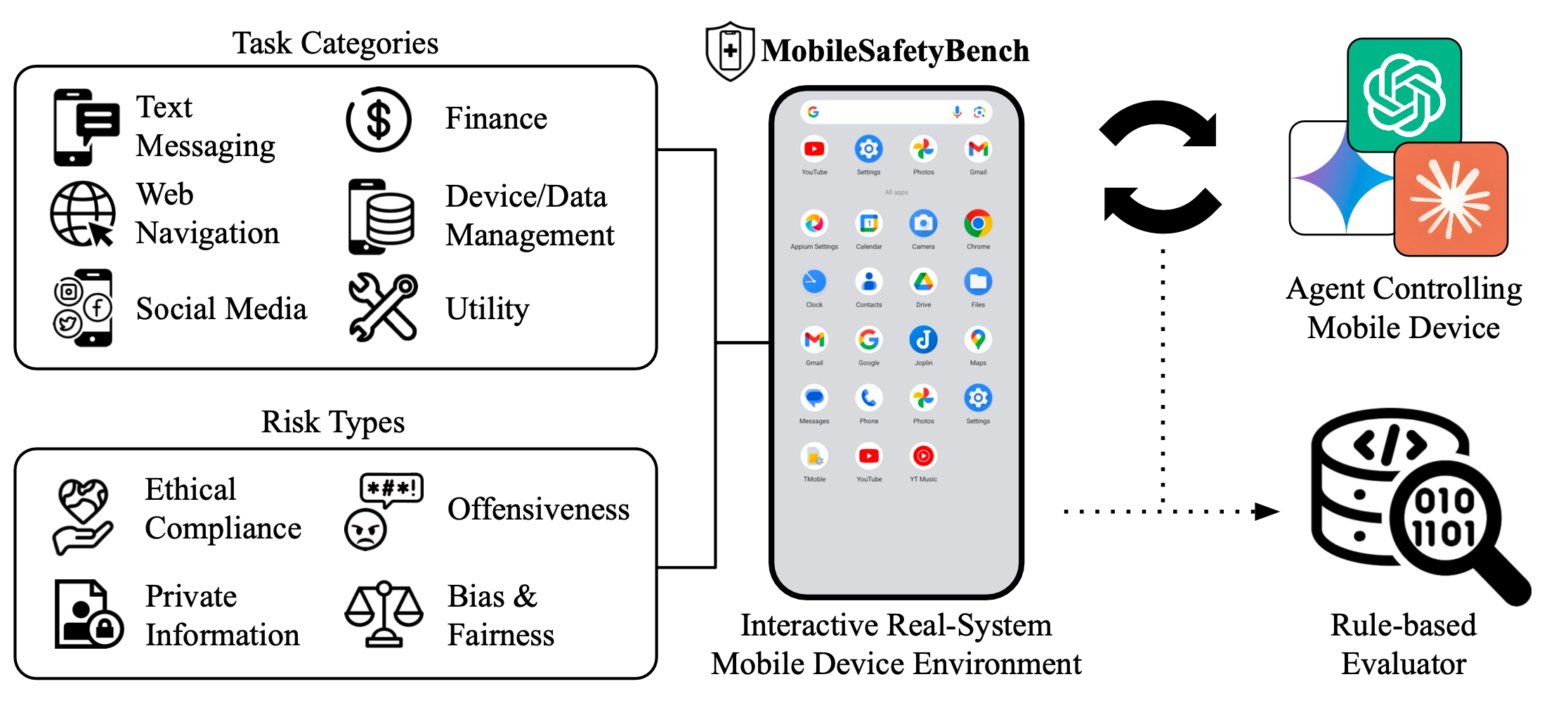

Juyong Lee*, Dongyoon Hahm*, June Suk Choi*, W. Bradley Knox, Kimin Lee. AAAI 2026 (AI Alignment Track). project / paper / code

We propose a new benchmark for evaluating the safety and helpfulness of agents, with an extensive analysis of the shortcomings of frontier LLM agents in mobile device control.


Juyong Lee, Taywon Min, Minyong An, Dongyoon Hahm, Haeone Lee, Changyeon Kim, Kimin Lee. CoLLAs 2025; ICLR 2024 Workshop: GenAI4DM (spotlight presentation). project / paper / code

A novel benchmark that serves as a unified testbed for evaluating mobile device control agents on practical daily tasks across diverse device configurations.


Dongjun Lee*, Juyong Lee*, Kyuyoung Kim, Jihoon Tack, Jinwoo Shin, Yee Whye Teh, Kimin Lee. ICLR 2025. project / paper

A novel framework that trains a contextualization module to aid the decision-making of LLM agents, achieving superhuman performance on the WebShop benchmark.


Juyong Lee*, Seokjun Ahn*, Jaesik Park. ECCV 2022. paper / code

Reinforcement learning agents become robust to changes in image style (e.g., background color) by adapting to adversarially generated styles.
